What is Infernet?

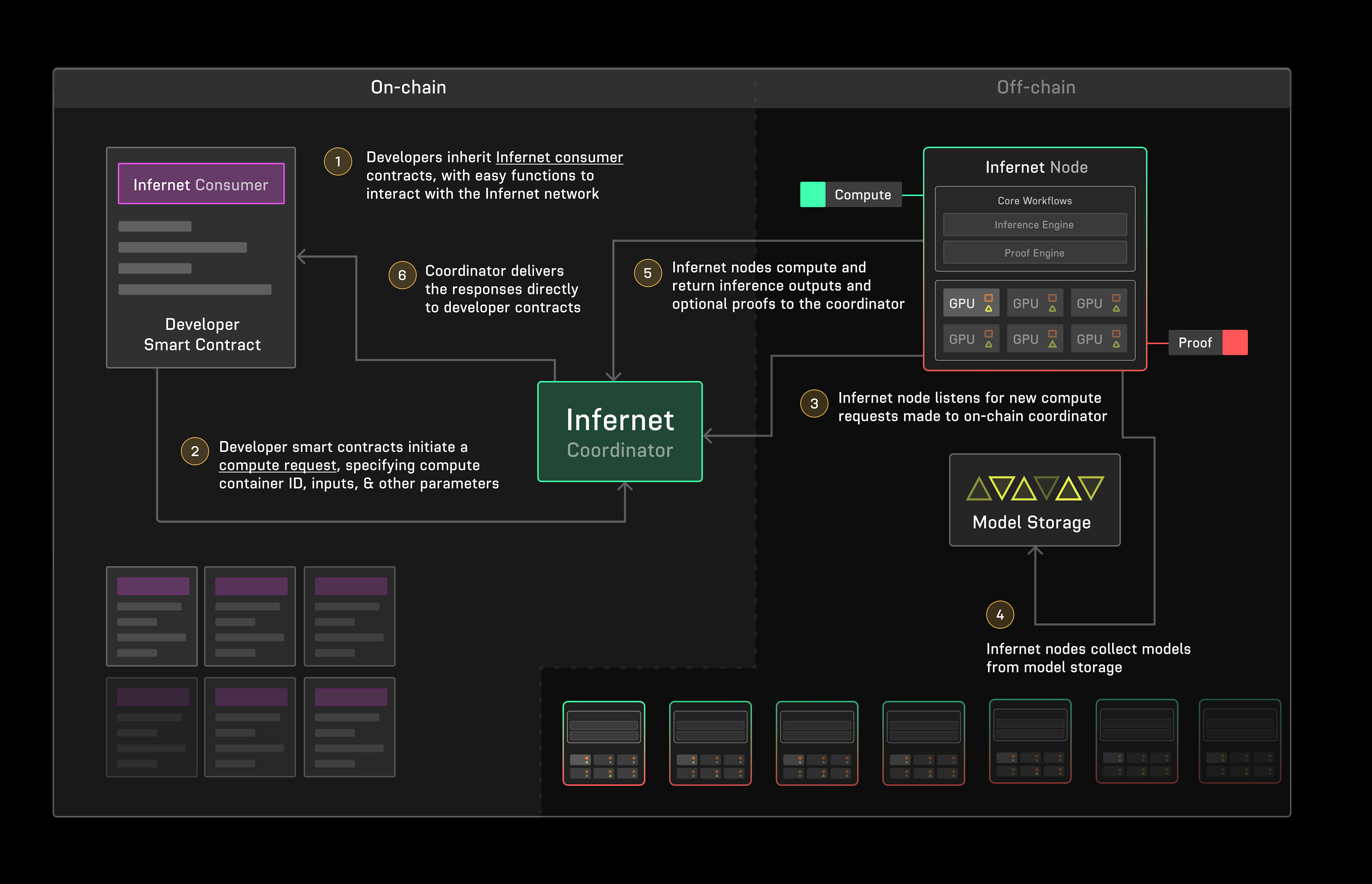

Infernet is a lightweight framework for bringing off-chain compute on-chain. It lets smart contract developers request computation from off-chain Infernet Nodes and have the results delivered to their consuming on-chain smart contracts via the Infernet SDK.

As the name implies, the primary application of Infernet is in servicing ML Inference workloads. Users can build and host ML models, deploy them to Infernet nodes, and create subscriptions to consume inference outputs and optional succinct execution proofs (for classical ML models that fit within a circuit) from smart contracts on-chain.
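The request-and-deliver flow described above can be pictured with a small in-memory model. This is only a sketch under stated assumptions: `ToyConsumer`, `ToySubscription`, `ToyCoordinator`, and `ToyNode` are hypothetical names invented for illustration, not the Infernet SDK or Infernet Node API, and each subscription is treated as a one-shot request for simplicity.

```python
from dataclasses import dataclass
from typing import Callable, Dict


class ToyConsumer:
    """Stand-in for a consuming smart contract (hypothetical, not the SDK)."""

    def __init__(self) -> None:
        self.received: Dict[int, bytes] = {}

    def receive_compute(self, sub_id: int, output: bytes) -> None:
        # Called when a node delivers the result of a subscription.
        self.received[sub_id] = output


@dataclass
class ToySubscription:
    sub_id: int
    container: str  # name of the workload to run off-chain
    inputs: bytes
    consumer: ToyConsumer
    fulfilled: bool = False


class ToyCoordinator:
    """Stand-in for the on-chain bookkeeping between consumers and nodes."""

    def __init__(self) -> None:
        self.subscriptions: Dict[int, ToySubscription] = {}

    def create_subscription(
        self, consumer: ToyConsumer, container: str, inputs: bytes
    ) -> int:
        sub_id = len(self.subscriptions) + 1
        self.subscriptions[sub_id] = ToySubscription(
            sub_id, container, inputs, consumer
        )
        return sub_id

    def deliver(self, sub_id: int, output: bytes) -> None:
        sub = self.subscriptions[sub_id]
        if sub.fulfilled:
            raise ValueError("subscription already fulfilled")
        sub.fulfilled = True
        sub.consumer.receive_compute(sub_id, output)


class ToyNode:
    """Stand-in for a node that runs named workloads off-chain."""

    def __init__(self, containers: Dict[str, Callable[[bytes], bytes]]) -> None:
        self.containers = containers

    def process_pending(self, coordinator: ToyCoordinator) -> int:
        """Run every unfulfilled subscription this node can serve."""
        done = 0
        for sub in list(coordinator.subscriptions.values()):
            if sub.fulfilled or sub.container not in self.containers:
                continue
            output = self.containers[sub.container](sub.inputs)
            coordinator.deliver(sub.sub_id, output)
            done += 1
        return done
```

In this toy model, a consumer registers a subscription with the coordinator, a node that hosts the named container picks it up, runs it, and delivers the output back to the consumer. A real deployment replaces each class with on-chain contracts and a running node.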

Simple yet flexible

Infernet is built to be simple. To that end, we forgo things like coordinating validators through consensus, in favor of first iterating and validating with builders on their novel use-cases.

Today, Infernet can:

- Host arbitrary compute workloads via containers

- Allow users to run various ML models via infernet-ml

- Be consumed through easy interfaces on-chain via the Infernet SDK

- Be used for both on-chain and off-chain compute processing via the Infernet Node

- Support on-chain payments. Node operators can be paid for their compute workloads, and smart contract developers can pay for the compute they consume

- Be joined permissionlessly by any builder, smart contract developer, or node operator

Head over to Overview for a high-level view of Infernet's architecture and capabilities.

What services are available?

Infernet 1.0.0 comes with a set of pre-built services that can be used out-of-the-box to run various common ML tasks.

These are quite useful for web3 developers who want to quickly get started with ML on-chain. The services are:

- CSS Inference Service: Serves closed-source models, e.g. OpenAI's GPT-4.

- ONNX Inference Service: Serves ONNX models.

- TGI Inference Client Service: Uses Huggingface's TGI Client to generate text completions.

- Torch Inference Service: Serves any Torch model. It comes with built-in support for all scikit-learn models.

- Huggingface Inference Client Service: Uses Huggingface's Inference Client to support various Huggingface tasks.

New Features in Infernet 1.0.0

Payment System

Infernet now supports on-chain payments! Node operators can be paid for their compute workloads, and smart contract developers can pay for the compute they consume. Both can register an on-chain Infernet wallet to send or receive payments.
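As a rough illustration of the payment relationship, the sketch below models two wallets and a single settlement. `ToyWallet` and `pay_for_compute` are hypothetical names chosen for this example; they do not reflect the actual Infernet wallet contracts, which live on-chain and may handle escrow, tokens, and fees differently.

```python
class ToyWallet:
    """Hypothetical stand-in for an on-chain wallet holding a token balance."""

    def __init__(self, owner: str, balance: int = 0) -> None:
        self.owner = owner
        self.balance = balance

    def transfer(self, to: "ToyWallet", amount: int) -> None:
        # Reject nonsensical or unaffordable transfers before moving funds.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount
        to.balance += amount


def pay_for_compute(
    consumer_wallet: ToyWallet, node_wallet: ToyWallet, price: int
) -> None:
    """Move the agreed price from the consumer to the node operator."""
    consumer_wallet.transfer(node_wallet, price)
```

The point of the sketch is only the direction of funds: the smart contract developer's wallet is debited and the node operator's wallet is credited once compute is paid for.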

A detailed explainer on the payment system can be found here.

Proofs

Infernet's contracts now provide a framework for verifying compute. Consumers can request proofs of computation from nodes, and the proofs that nodes provide can be verified on-chain.

Infernet remains unopinionated about the proof system and allows any proof system to be used. Consumers specify a verifier contract, which verifies the proof. Node operators can choose to provide proofs for a given computation, and those proofs must then pass the verifier contract.
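The pluggable-verifier idea can be sketched as an interface that the consumer chooses and the node's proof must satisfy. Everything here is an assumption made for illustration: `Verifier`, `HashCommitmentVerifier`, `node_compute_with_proof`, and `consumer_accept` are hypothetical names, and the "proof" is a plain SHA-256 hash rather than a real succinct proof.

```python
import hashlib
from typing import Protocol, Tuple


class Verifier(Protocol):
    """Any proof system works as long as it exposes a verify() check."""

    def verify(self, inputs: bytes, output: bytes, proof: bytes) -> bool: ...


class HashCommitmentVerifier:
    """Toy verifier: accepts proof == sha256(inputs || output).

    This is NOT a real proof of computation, only a placeholder
    that shows where a verifier plugs in.
    """

    def verify(self, inputs: bytes, output: bytes, proof: bytes) -> bool:
        return hashlib.sha256(inputs + output).digest() == proof


def node_compute_with_proof(inputs: bytes) -> Tuple[bytes, bytes]:
    """Hypothetical node side: run the workload and attach a proof."""
    output = inputs[::-1]  # stand-in workload: reverse the bytes
    proof = hashlib.sha256(inputs + output).digest()
    return output, proof


def consumer_accept(
    verifier: Verifier, inputs: bytes, output: bytes, proof: bytes
) -> bytes:
    """Hypothetical consumer side: only accept output whose proof verifies."""
    if not verifier.verify(inputs, output, proof):
        raise ValueError("proof rejected by verifier")
    return output
```

Swapping in a different proof system means supplying another object with the same `verify` method; the consumer-side check does not change.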

A detailed explainer on the proof system can be found here.

Streaming Responses

Infernet Nodes now support streaming responses. This helps power chatbots and other off-chain applications that require real-time responses.
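To show why streaming matters for chat-style applications, here is a minimal sketch of a client that handles output piece by piece instead of waiting for the full response. `stream_completion` is a hypothetical local generator standing in for a streaming node response; it does not call any Infernet endpoint.

```python
from typing import Iterable, Iterator


def stream_completion(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a streamed response: yields one piece at a time."""
    for word in f"echo: {prompt}".split(" "):
        yield word + " "


def consume(chunks: Iterable[str]) -> str:
    """Display each chunk as soon as it arrives, then return the full text."""
    parts = []
    for chunk in chunks:
        print(chunk, end="", flush=True)  # show partial output immediately
        parts.append(chunk)
    print()
    return "".join(parts).rstrip()
```

A chatbot built this way can start rendering text as soon as the first chunk arrives, which is the real-time behavior streaming responses are meant to enable.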

Show me some code

Want to dive right in? Check out Ritual Learn for hands-on step-by-step tutorials & videos on bringing a variety of ML models into your smart contracts:

- Prompt to NFT: A tutorial on using Infernet to create & mint an NFT generated by Stable Diffusion from a prompt.

- Running a Torch Model: We import a Torch model, and use Infernet ML's workflows to invoke it either from a smart contract or from infernet-node's REST API.

- Running an ONNX Model: Similar to the Torch tutorial, we do the same with an ONNX model.

- TGI Inference: A tutorial on running Mistral-7b on Infernet, and optionally delivering its output to a smart contract.

- GPT-4 Inference: A tutorial on how to use our CSS Inference workflow to integrate with OpenAI's completions API.